Overview Tab

The Overview tab gives a consolidated, at-a-glance view of a model’s status, metrics, and usage. It is available for all model types: foundation models, fine-tuned models, and inference modules. The details vary slightly by model type, but the overall layout is the same for all three.

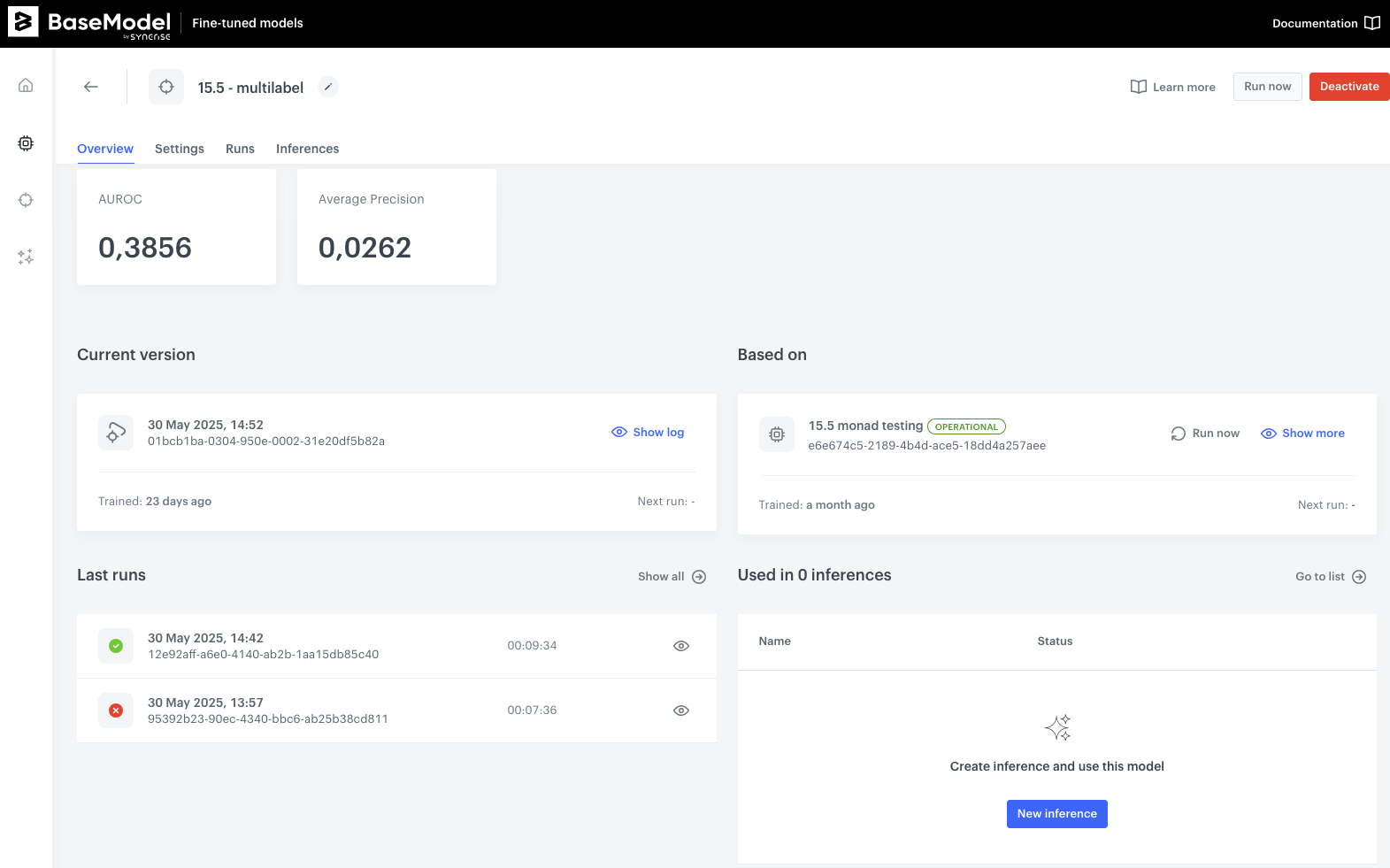

The view will look like this (in this example, Fine-tuned model):

Metrics Panel

The top of the page shows performance metrics. Depending on the model type, these may include:

- AUROC, Average Precision, or other evaluation metrics (for fine-tuned models)

- Number of Predictions (for inference modules)

- A placeholder state when no metrics are available yet (e.g., after initial training)
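
AUROC and Average Precision both measure how well a fine-tuned model ranks positive examples above negative ones. As a minimal sketch of what these numbers mean, assuming binary labels (0 or 1) and one predicted score per example, the functions below implement the standard definitions in plain Python. The function names are illustrative, and this is not necessarily how the platform computes the values shown in the Metrics Panel.

```python
from __future__ import annotations


def auroc(labels: list[int], scores: list[float]) -> float:
    """Probability that a random positive scores higher than a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(labels: list[int], scores: list[float]) -> float:
    """Sum of precision at each distinct threshold, weighted by the gain in recall."""
    total_pos = sum(1 for y in labels if y == 1)
    if total_pos == 0:
        raise ValueError("Average Precision needs at least one positive example")
    # Walk the examples from highest to lowest score.
    pairs = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    ap, prev_recall = 0.0, 0.0
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Consume every example tied at this score before measuring precision/recall.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / total_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auroc(labels, scores))              # 0.75
print(average_precision(labels, scores))  # about 0.833
```

For real evaluations, scikit-learn's `roc_auc_score` and `average_precision_score` compute these quantities; the step-wise, non-interpolated sum in `average_precision` above follows the same definition those functions document.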

Current Version

This section displays the most recent version of the model, including:

- Training timestamp

- Version identifier

- A Show log link that opens the execution log

Last Runs

A chronological list of recent model runs, each showing:

- Status indicator (green for success, red for failure)

- Execution timestamp

- Run duration

- A quick-view option to open that run's log

Based On / Used In

The content of this section depends on the model type:

- Foundation models display a list of fine-tuned models derived from them

- Fine-tuned models show the base foundation model they were trained on

- Inference modules reference the fine-tuned model they are serving
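
These relationships form a simple lineage chain: a foundation model can have several fine-tuned models derived from it, and each fine-tuned model can be served by inference modules. The sketch below models that chain with hypothetical Python types (`Model`, `derive`, and `based_on_used_in` are illustrative names, not part of the product) to show which related models each view would list.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Model:
    """Hypothetical record for one model in the lineage chain."""
    name: str
    kind: str  # assumed values: "foundation", "fine-tuned", "inference"
    # Parent this model was trained on or serves (None for foundation models).
    based_on: Optional[Model] = field(default=None, repr=False, compare=False)
    # Models derived from, or serving, this one.
    used_in: list[Model] = field(default_factory=list, repr=False, compare=False)


def derive(parent: Model, name: str, kind: str) -> Model:
    """Create a child model and record the link in both directions."""
    child = Model(name=name, kind=kind, based_on=parent)
    parent.used_in.append(child)
    return child


def based_on_used_in(model: Model) -> list[str]:
    """Names the Based On / Used In section would list for this model."""
    if model.kind == "foundation":
        # Foundation models list the fine-tuned models derived from them.
        return [child.name for child in model.used_in]
    # Fine-tuned models and inference modules point back to their parent.
    return [model.based_on.name] if model.based_on is not None else []


# Hypothetical lineage: one foundation model, one fine-tuned model, one inference module.
base = Model("foundation-a", "foundation")
tuned = derive(base, "fine-tuned-a", "fine-tuned")
serving = derive(tuned, "inference-a", "inference")

print(based_on_used_in(base))     # ['fine-tuned-a']
print(based_on_used_in(tuned))    # ['foundation-a']
print(based_on_used_in(serving))  # ['fine-tuned-a']
```

Storing each link in both directions keeps every lookup a direct field access, at the cost of updating two records whenever a model is derived. The `repr=False, compare=False` settings keep the parent/child cycle out of the generated `__repr__` and `__eq__`.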

Output (Inference only)

Inference modules include an additional Output section, detailing where prediction results are written (e.g., a Snowflake table or external path).
