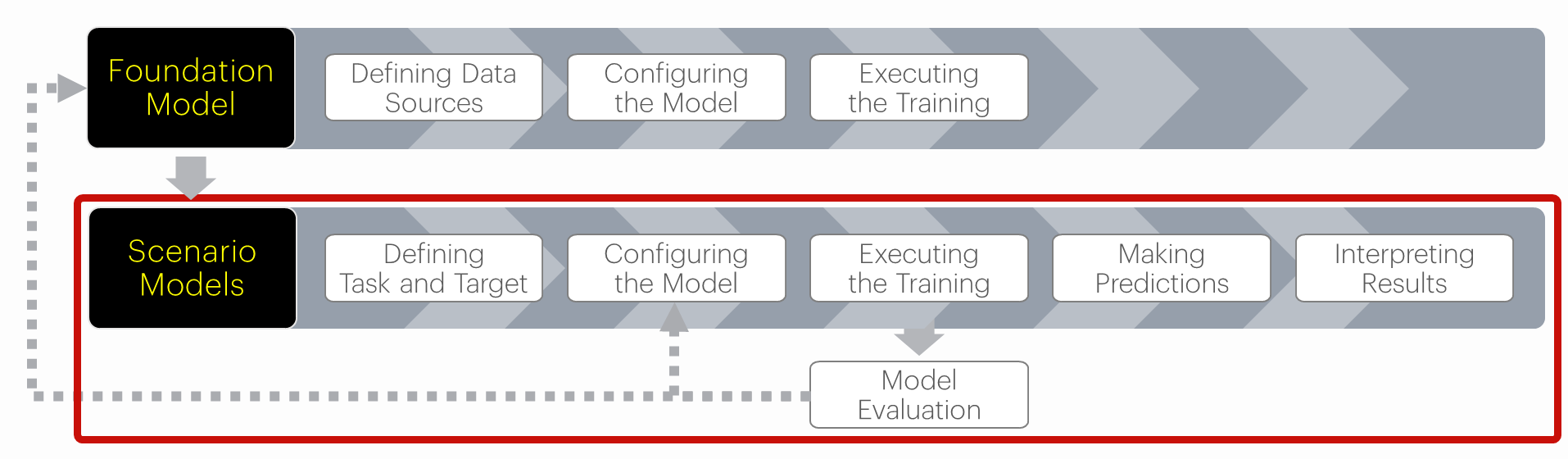

Scenario Model Stage Overview

The overview of the workflow

Check This First!

This article refers to BaseModel accessed via a Docker container. Please refer to the Snowflake Native App section if you are using BaseModel as a Snowflake GUI application.

The Foundation Model is designed to understand the behavior and interactions between entities and develop a general predictive capability in the domain. During training, it doesn’t have a more specific goal than that.

To address a particular business problem, you need to create a downstream model tailored to the scenario, by fine-tuning the Foundation Model for the specific task.

In this article we focus on that step:

To build a downstream model for your particular business objective, you need to adapt the training script template and then run the training, either from a Python function or via the command line.

Any scenario training script contains the following elements:

Import required classes and packages

Perform required imports, incl. the correct class for the task, aligned with your scenario:

    from typing import Dict
    from datetime import timedelta

    import numpy as np

    from monad.ui.config import TrainingParams
    from monad.ui.module import MultilabelClassificationTask, load_from_foundation_model
    from monad.ui.target_function import Attributes, Events, has_incomplete_training_window, SPLIT_TIMESTAMP

Task definition

Define the target names, the target entity, and the task, using the task class that matches your scenario:

    TARGET_NAMES = ["sony", "apple"]
    TARGET_ENTITY = "brand"

    task = MultilabelClassificationTask(class_names=TARGET_NAMES)

Target definition

Define the target function, which will steer the loss calculation during model training:

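    def target_fn(_history: Events, future: Events, _entity: Attributes, _ctx: Dict) -> np.ndarray:
        # Length of the prediction window that follows the split point
        target_window = timedelta(days=21)

        # Skip samples whose target window is not complete
        if has_incomplete_training_window(_ctx, target_window):
            return None

        # Restrict future events to the target window
        future = future.interval_from(_ctx[SPLIT_TIMESTAMP], target_window)

        # For each target brand, check whether a matching transaction exists
        purchase_target, _ = future["transactions"].groupBy(TARGET_ENTITY).exists(groups=TARGET_NAMES)

        return purchase_target

Instantiating the trainer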

Specify the location of your source foundation model and instantiate the trainer, which loads the foundation model together with the selected task and target function.

    fm_path = "/path/to/your/fm"

    trainer = load_from_foundation_model(
        checkpoint_path=fm_path,
        downstream_task=task,
        target_fn=target_fn,
    )

Training configuration

Set and adapt, if required, the training parameters, incl. where to store your scenario model.

    training_params = TrainingParams(
        learning_rate=0.0001,
        checkpoint_dir="/path/to/your/scenario/checkpoints",
        epochs=3,
    )

Model training

Train the model using the trainer's fit() method.

    trainer.fit(training_params=training_params)

Given the breadth of the material, it has been divided into two sections:

- Defining the Task and the Target:
  - Identify the machine learning problem: Determine the machine learning problem that aligns with the business objective.
  - Define the target function: Specify the function that will guide the model's optimization process.
- Setting up the learning process:
  - Select the pre-trained Foundation Model: Point the script to the directory containing the features of the pre-trained model.
  - Configure the modelling task: Set up the downstream task and, if necessary, adjust the training parameters.
  - Instantiate the trainer: Create an instance of the trainer and, if needed, modify the loading process.
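To make the "Define the target function" step more concrete, here is a minimal, library-free sketch of the kind of label the example target function produces: one 0/1 value per brand, indicating whether that brand was purchased in the 21 days after the split point. It does not use BaseModel at all. `toy_target`, the `Purchase` tuple, and the `data_end` argument are hypothetical names introduced for illustration only. The reading of an "incomplete training window" (the data ends before the window does) and the half-open window boundaries are assumptions, not confirmed behavior of `has_incomplete_training_window` or `interval_from`.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Hypothetical stand-ins for illustration; none of this is BaseModel API.
TARGET_NAMES = ["sony", "apple"]
TARGET_WINDOW = timedelta(days=21)

Purchase = Tuple[datetime, str]  # (timestamp, brand)


def toy_target(
    purchases: List[Purchase],
    split_ts: datetime,
    data_end: datetime,
) -> Optional[List[float]]:
    """Return one 0/1 label per brand, or None if the window is incomplete."""
    window_end = split_ts + TARGET_WINDOW

    # Assumed meaning of an incomplete window: the data ends before the
    # full target window has elapsed, so the label cannot be known.
    if window_end > data_end:
        return None

    # Keep only brands purchased inside the window.
    # The half-open interval [split_ts, window_end) is an assumption.
    brands_in_window = {
        brand for ts, brand in purchases if split_ts <= ts < window_end
    }

    # One independent yes/no label per target brand (multi-label target).
    return [1.0 if name in brands_in_window else 0.0 for name in TARGET_NAMES]


split = datetime(2024, 1, 1)
purchases = [
    (datetime(2023, 12, 20), "apple"),  # before the split: history, ignored
    (datetime(2024, 1, 5), "sony"),     # inside the 21-day window
    (datetime(2024, 2, 15), "apple"),   # after the window: ignored
]

labels = toy_target(purchases, split, data_end=datetime(2024, 3, 1))
incomplete = toy_target(purchases, split, data_end=datetime(2024, 1, 10))
print(labels)      # [1.0, 0.0]
print(incomplete)  # None
```

In the real script, the `Events` object and the trainer context do the equivalent filtering and grouping. The sketch only illustrates why the task is multi-label (each brand gets its own independent label) and why some samples yield no target.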

Follow the links above, or proceed to the following articles for detailed explanations of the above tasks.
