Requirements

What do you need to comfortably use BaseModel?

Hardware and Data Requirements

In order to effectively and efficiently utilize BaseModel you need sufficient amount of behavioral data and adequately powerful infrastructure.

Data

-

Minimum requirements:

- At least 10k individual behavioral profiles (eg. customers, subscribers, members etc.) They will act as the main entity and level of the model's predictions,

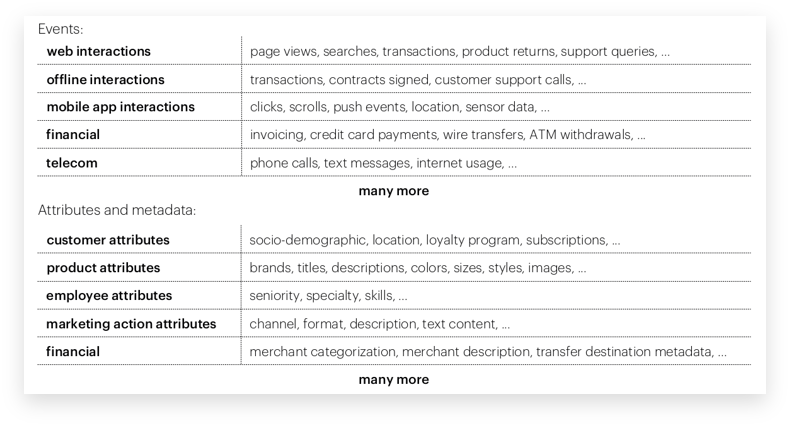

- One or more event data sources containing behavioral data. These are interactions between the main entity and other entities (eg. products, services, stores etc.)

- All records in event data sources must have entity ID and timestamp of the event.

- There should be at least 100k interactions observed per month, tied to behavioral profiles.

-

Recommended information and data volume:

- At least 3 months' worth of event data for industries where interactions are daily, such as banking, telco, FMCG retail.

- At least 1 year's worth of data for industries where the interactions are less frequent, eg. fashion, insurance, automotive.

- Attributes related to behavioral profile and other entities (see below).

Hardware

- An environment capable of running Docker containers

- A100 GPU or better with CUDA 11.7 or higher

- Minimum of 240 GB RAM

- At least 32 CPU cores

- 1 TB hard disk space

Example infrastructure and illustrative performance

Our proprietary algorithms - Cleora and emde - enable a notably efficient and fast training process, allowing to process vast amount of behavioral data. Below is a example of an end-to-end cycle of foundation model and downstream model training based on a real-world retail customer which should help to assess resource requirements and expected costs in your case:

Data Size

- Ca. 8 Bn events

- Ca. 18 Mn unique Client IDs

- Ca. 1 Mn unique Product IDs

Performance

- Self-supervised foundation model training: 12h @ 1x Nvidia A100

- Full (non-LoRA) fine-tuning for a supervised task (brand propensity for 16,000 brands): 10h @ 1x Nvidia A100

- Full model (non-quantized) inference throughput: 2718 clients / sec per 1 GPU

- Training and inference scale linearly with the number of GPUs.

If necessary, additional optimizations may be applied depending on customer needs, at the cost of slightly impaired model quality:

- Low rank fine-tuning,

- Model quantization for inference.

Updated 12 months ago